Can machines understand language the way humans do—or are they just elegantly predicting what comes next? That question sits at the heart of modern Natural Language Processing (NLP). While humans read between the lines, machines rely on statistical patterns. But can those patterns replicate true understanding?

Can Machines Understand Language – or Just Predict It Elegantly?

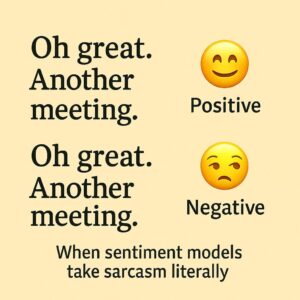

When you hear someone say, “Oh, great. Another meeting,” you instantly know whether they mean it sincerely or really don’t. That understanding comes from more than just processing words. Your brain reads tone, recalls past conversations, and interprets intent.

You’re not just hearing language—you’re decoding meaning. But machines? They run patterns. Predict the next word. Score probabilities. Call it elegant computation.

And that brings us to a key question at the heart of natural language processing (NLP): Can machines ever replicate human perspective?

Let’s explore.

Humans vs. Machines: Who Understands Better?

We humans are context machines. We don’t just hear words; we feel them by decoding facial expressions, tone of voice, body language, cultural references, even the silence between words.

Take the word: Fine. Now say it out loud. Imagine your partner saying it. Now imagine your boss. Now your mom, texting it, with a period at the end.

Same word. Very different vibes.

That’s the magic of context. And it’s what makes language both powerful and slippery—especially for machines.

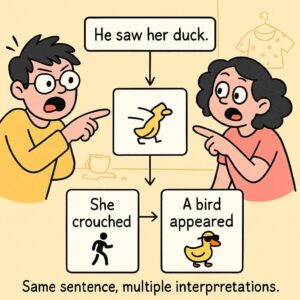

Let’s Play a Game: He Saw Her Duck.

Read this sentence: He saw her duck.

What popped into your head?

Did she crouch suddenly? Or did you imagine a feathery animal waddling by?

Welcome to linguistic ambiguity. Language is often more suggestion than statement. And for machines that lack human experience, this ambiguity can cause real trouble.

Why Context Is the Secret Sauce of Language

Humans thrive on context. We instinctively factor in:

- Tone and pacing

- Cultural norms

- Body language

- Past experiences

- Personal relationships

- What’s left unsaid

We understand what someone means even when the words say something else entirely. Machines? They don’t have that lived layer. They only see patterns—huge statistical ones, yes, but patterns, nonetheless.

Machines vs. Meaning: A Philosophical Detour

To answer, “Can machines understand language?”, we need to ask what understanding actually is.

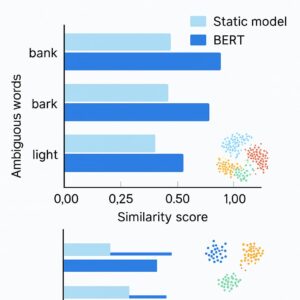

When a model like BERT or GPT reads a sentence, it doesn’t think. It doesn’t understand a “bank” as a river’s edge or a place to store money. It only sees word vectors (mathematical versions of language) and predicts which tokens are likely: a masked-out word in BERT’s case, the next word in GPT’s.
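The “guess what’s likely” idea can be sketched with a toy counting model. This is not how BERT or GPT work internally (they are large neural networks), and the corpus and the names `corpus`, `follow_counts`, and `next_word_probs` are made up for illustration. It only shows what predicting from patterns alone looks like:

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, purely for illustration.
corpus = [
    "she went to the bank to deposit cash",
    "he went to the bank to withdraw cash",
    "he sat on the river bank",
]

# Count how often each word is followed by each other word.
follow_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, nxt in zip(words, words[1:]):
        follow_counts[current][nxt] += 1


def next_word_probs(word):
    """Return {next_word: probability} estimated from raw counts."""
    counts = follow_counts[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


print(next_word_probs("bank"))  # {'to': 1.0}
print(next_word_probs("to"))    # {'the': 0.5, 'deposit': 0.25, 'withdraw': 0.25}
```

The model has no idea what a bank is. It has only seen that, in this corpus, “bank” tends to be followed by “to”.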

So, is understanding just advanced pattern recognition? Or is it something more?

Let’s test it.

Let’s Test BERT: Can It Spot the Difference?

We’ll use BERT to compare how it interprets the word “bank” in two very different contexts.

Here’s the code:

    # Save this as app.py and run with: streamlit run app.py
    import streamlit as st
    import torch
    from transformers import BertTokenizer, BertModel
    from sklearn.metrics.pairwise import cosine_similarity

    st.title("Can Machines Understand Context?")
    st.subheader("Explore ambiguity and meaning using BERT embeddings")
    st.markdown("""
    Type two sentences containing the *same word* in different contexts.
    We’ll extract contextual embeddings using BERT and measure how different their meanings appear to the model.
    """)

    sentence1 = st.text_input("Sentence 1", "He sat on the river bank and watched the water.")
    sentence2 = st.text_input("Sentence 2", "She went to the bank to deposit cash.")
    target_word = st.text_input("Target word (must appear in both sentences)", "bank")


    @st.cache_resource
    def load_model():
        # Load the tokenizer and model once; Streamlit caches them across reruns.
        tokenizer = BertTokenizer.from_pretrained("bert-base-uncased")
        model = BertModel.from_pretrained("bert-base-uncased", output_hidden_states=True)
        model.eval()
        return tokenizer, model


    def get_embedding(sentence, word, tokenizer, model):
        inputs = tokenizer(sentence, return_tensors="pt")
        with torch.no_grad():
            outputs = model(**inputs)
        tokens = tokenizer.convert_ids_to_tokens(inputs["input_ids"][0])
        try:
            # bert-base-uncased lowercases its input, so match in lowercase.
            # A word that is split into several WordPiece tokens will not be found.
            idx = tokens.index(word.lower())
        except ValueError:
            return None, tokens
        # Sum the last four hidden layers to get one vector per token.
        hidden_states = outputs.hidden_states
        embeddings = torch.stack(hidden_states[-4:]).sum(0).squeeze(0)
        return embeddings[idx].numpy(), tokens


    if sentence1 and sentence2 and target_word:
        tokenizer, model = load_model()
        emb1, tokens1 = get_embedding(sentence1, target_word, tokenizer, model)
        emb2, tokens2 = get_embedding(sentence2, target_word, tokenizer, model)

        if emb1 is not None and emb2 is not None:
            sim = cosine_similarity([emb1], [emb2])[0][0]
            st.success(f"Cosine similarity between '{target_word}' meanings: *{sim:.4f}*")
            st.caption("A lower score means more contextual difference.")
        else:
            st.warning(f"Could not find '{target_word}' in both token lists.")
            st.text(f"Tokens in sentence 1: {tokens1}")
            st.text(f"Tokens in sentence 2: {tokens2}")

What Happens?

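BERT gives the word bank a different embedding (vector representation) in each sentence, so the cosine similarity between the two comes out below 1. In other words, the model represents the two uses differently based on the words around them.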
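For a feel of what the similarity score measures, here is a minimal sketch of cosine similarity in plain Python. The two vectors are made up and have only three dimensions (embeddings from `bert-base-uncased` have 768), and `cosine`, `river_bank`, and `money_bank` are illustrative names, not part of the app:

```python
import math


def cosine(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Made-up stand-ins for the two "bank" embeddings.
river_bank = [1.0, 0.0, 1.0]
money_bank = [1.0, 1.0, 0.0]

print(round(cosine(river_bank, river_bank), 4))  # 1.0 (same direction)
print(round(cosine(river_bank, money_bank), 4))  # 0.5 (partly overlapping)
```

A score of 1 means the vectors point the same way; the further the score drops, the more the two representations diverge.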

It’s not understanding in a human sense—but it’s impressive.

It’s like BERT saying: I don’t know what a river is, but I’ve seen enough sentences to guess this ‘bank’ is probably about water—not money.

But can it handle something messier?

The Sarcasm Test: When Machines Get Played

Here’s a sentence: “Oh, perfect. Another meeting that could’ve been an email.”

Many sentiment analyzers will say: Positive. Why? Because sarcasm wears the costume of positivity while stabbing it in the back. To understand sarcasm, you need to understand intent. And that’s something machines struggle with.

Another one: He’s about as polite as a punch to the face.

Try feeding that to a model. There’s a good chance you’ll get the wrong vibe.
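To see why a word-counting approach gets fooled, here is a deliberately naive sketch. The lexicon, its scores, and the `naive_sentiment` function are invented for this example; real analyzers use far larger lexicons or trained models, and some handle sarcasm better than this:

```python
import re

# Hypothetical mini-lexicon: positive words score above 0, negative below.
LEXICON = {"perfect": 2, "great": 2, "polite": 1, "terrible": -2, "awful": -2}


def naive_sentiment(text):
    """Label text by summing per-word scores, ignoring context and intent."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(LEXICON.get(word, 0) for word in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(naive_sentiment("Oh, perfect. Another meeting that could've been an email."))  # positive
print(naive_sentiment("He's about as polite as a punch to the face."))  # positive
```

Both sentences come out positive because the scorer sees “perfect” and “polite” and nothing else. The intent behind the words never enters the calculation.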

So… Do Machines Understand Language?

Let’s give credit where it’s due. NLP models are stunning achievements. They autocomplete emails, translate text with shocking fluency, and even write poems.

But let’s be honest: They’re guessing.

Statistically, yes. Elegantly, absolutely.

But they don’t know what a duck is. Or a punch. Or why your mom’s “Fine.” is basically a threat.

Can you explain why “Fine.” from your mom feels threatening, but not when your co-worker says it? What invisible knowledge are you using?

Machines don’t have that knowledge. They are mirrors, reflecting our patterns, our logic, and our contradictions.

Maybe Understanding Requires Something Else

And yet… The line between guessing really well and understanding is blurrier than we like to admit.

What if understanding isn’t just seeing patterns—but feeling them?

Maybe it’s the memory of a bad Monday. The hesitation in someone’s voice. The lived experience behind a word.

Until machines have that, they’ll keep speaking.

But can they mean?

